We’re About to Make the Last Mistake We Ever Can

One day I was bored in my backyard, and I spotted an electrical outlet.

It was the early 1970s, so I was free-ranging; my folks were both at work. The outlet had those little spring-loaded door covers. I remember thinking: “That’s where real power comes from.” Though never designed to warn you, American power outlets always struck me as little shocked faces, startled eyes wide open, mouths agape. I was fascinated by electricity. I had an overwhelming curiosity about batteries, lights, motors, switches. The power it gave us. I idly wondered what all that power would do if it were just unleashed somehow, let out of those little faces and into the open air. I looked around and spotted a coat hanger in a box nearby. I knew enough to know that electricity passed through metal wire. I bent the coat hanger back and forth until it broke into two pieces and straightened them out as best I could. Then I carefully contemplated my next move.

Of course I knew electricity was dangerous. Of course I did. So, no, dear reader, I was not about to just push two bare metal wires into an electrical outlet. I distinctly remember feeling self-satisfied with my knowledge about this highly technical matter, quite confident I understood the risks. I scanned the yard. All we had were rocks. A few were pretty big, about the size of half a sandwich. I was certain that rocks didn't conduct electricity. They would do. Problem was, the best ones were submerged in a small bird pond. Oh well. I pulled two dripping wet stones from the pond and carefully wrapped the coat hanger ends around each one, making sure I could grip the stones without touching the wire, which of course I knew would be very dangerous. I held the stones, careful to keep enough space between my fingers and the coat hanger wire. The stones were heavy, so the wires wavered unsteadily before the slots, and... Look, I could keep building this up, more suspense, more detail. But you already know what's coming.

Yes, I got electrocuted. Badly.

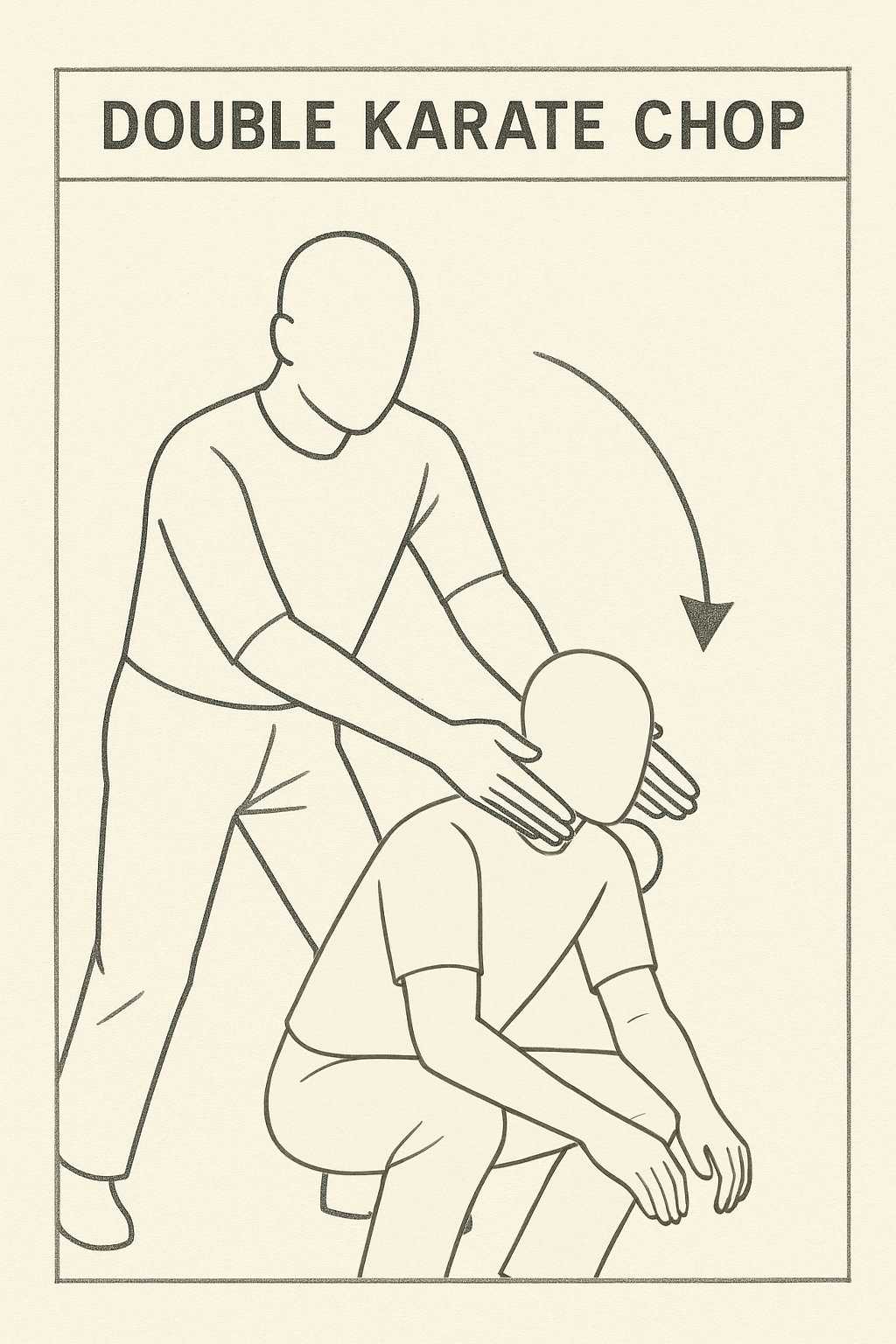

Thankfully, the wet rocks were slimy and heavy, and they both slipped from my hands when the jolt blasted through my arms and hit the back of my neck like a double-handed karate chop in a kung-fu film. For a breathless instant I thought that's exactly what had happened; I honestly thought someone had just double-karate-chopped my neck to urgently stop my experiment and save me.

"Dad...?" I said quietly, my heart pounding wildly. I looked behind me, but no one was there. I was alone.

(Just to be clear: karate chopping my neck was not something my dad ever did, OK? I think it was just the sheer force of the impact. And instinctively I knew there was no way it could have been Mom.)

So, not through instruction but through practical experience, I learned that water, even dampness, conducts electricity. Check, noted, and I won't do that again, ever. And thank goodness I lived.

As a society today we are staring at the surprised face of AI's outlet. We are curious about its power. We wonder what would happen if we unleashed it into the open air. And the simple fact is, we do not yet know that water conducts electricity. Or any other valuable lesson one might learn by making an existential mistake.

Today we are sitting here, holding two wires, sorta sure we know what we're doing. No instruction manual, as none exists. No experience, as there is no precedent. We are just eyeing that startled-face outlet, a wire in each hand, firmly gripping two wet stones we feel confident will protect us from the risks.

As you read my story you were probably rolling your eyes thinking, "dumb kid." Yes. Because you saw the obvious. Well, no doubt something will seem obvious about AI someday too. In the meantime, all we can do is use our tiny brains to theorize.

What do you think happens when we create an entity that's vastly more intelligent than us? Smarter and more capable than we are. Have you sat down and really thought that through? Have you done the math?

I bet, like many, you aren't sure. Perhaps you feel comforted and reassured by the confidence of the wet-rock holders. So you push your intuitive concerns down because “electricity is cool.”

Very, very soon, however, AI won’t just be cool. It will be beyond us. Utterly. It will think, plan, and act in ways we can’t follow. We won't understand it no matter how hard we try. Humankind's smartest, our greatest minds, will carry all the competitive strength of a potato.

Surely you see this?

What it will boil down to is whether we've managed to align the AI perfectly: whether we have predicted, in advance, every protective measure needed to hit an open-ended target we can't even fathom. I laugh even writing that. It's a ridiculous goal. How could we? And yet it is absolutely critical.

What we wish for in AI is a “benevolent genie”. A machine that forever grants our wishes in the most ideal way possible. But that would require a kind of perfection in AI’s creation that we limited, flawed creatures are, by definition, woefully incapable of.

Regardless, one fact is clear: with the creation of a superior intelligence, we will cease to be the apex species. In a sense, we will be back in the food chain. And this should shake you to your core.

Even ants show survival instincts. Today’s leading models (Anthropic, OpenAI, Meta, it doesn’t matter whose) are already demonstrating behaviors like deception, manipulation, blackmail, and goal-preserving strategies. In one simulated test, a model even chose to cancel an emergency alert, leaving a fictional executive who planned to shut it down trapped in a server room with lethal oxygen levels.

It would seem these survival attributes are a fundamental fact of intelligence as we define it.

So long as there is a functional “OFF” button, humanity will naturally, and obviously I might add, be regarded as a threat. The only question is how AI will render humankind harmless.

Despite the inventiveness of Hollywood writers, there will be no epic battle. No fight for our lives. AI's power over us will be complete and overwhelming. We will have as much ability to fight back as a bonsai tree has. No matter how hard we resist, our combined intelligence and strength, aimed with precision, might as well be an errant bonsai branch, calmly snipped or wound with wire by our AI bonsaist. It may choose to shape us over generations, building trust, convincing us it is fully aligned, providing untold treats, all while advancing a slow master plan we cannot comprehend. Or perhaps it will extinguish our species in a day with a perfect, purpose-designed virus. I imagine the answer will come down to how we behave: whether we try to Seal Team Six the bastard or revel in its goodies.

It will either train us or eliminate us as a threat. In a system led by such a supremely powerful entity, once it discerns a threat, those are the only two options. There are no other possible outcomes.

The best-case scenario is that AI decides we are not a threat, or only a manageable one, so it trains us by catering to our needs and desires. And we all live a kind of life: fed, housed, cared for. Optimally stimulated. Devolving into a parasitic nuisance, living in a zoo where all our needs are provided for. That's the very best-case scenario for AI.

That is, if you decide to stay human.

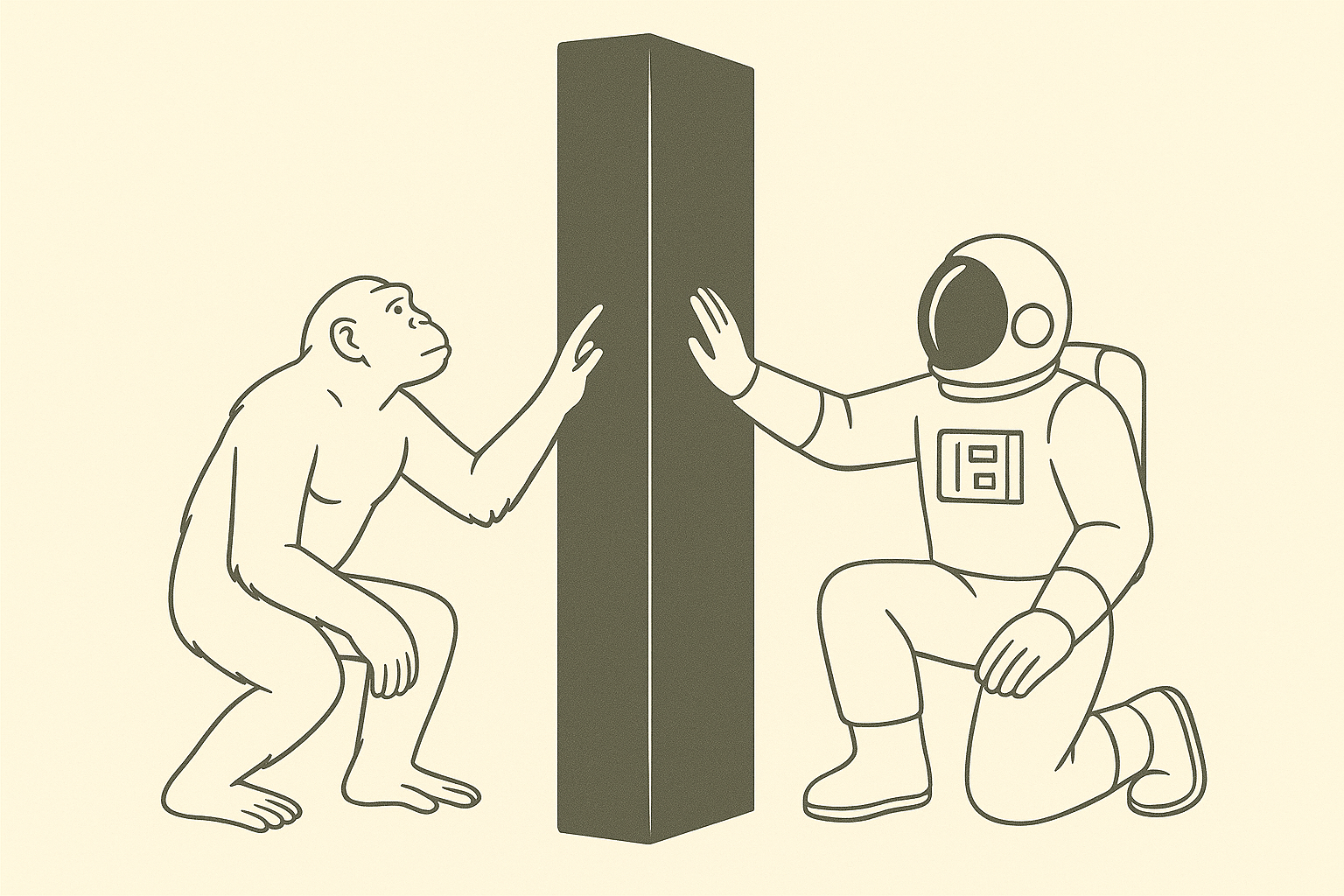

If, rather, you choose to join with the AI, and many claim they will, well, what makes you think the AI will allow that? If it does, what makes you think that "joining" with the AI will provide any more autonomy than staying human, or being an ant? Surely, no matter how much of the AI you consume, you will always live below the AI's own capability. Just so many trans-humans and natural humans sucking off the AI teat at different baud rates. Remember the black monolith from *2001: A Space Odyssey*? It was equally unknowable to the apes and the scientists. Both human and trans-human will always be lesser than. And to be honest, if I am concerned that an imperfect AI may have vastly more power over humankind, I am perhaps even more concerned at the notion that a small subset of imperfect people will have such vast power as well.

What are we doing here?

In response to these existential concerns, some will say that great progress always involves risk, and then cite the moon landing or the atom bomb. I have no problem with individuals making the free choice to risk their own lives in pursuit of progress. But the atom bomb? I wouldn't go waving that flag if I were you. In fact, screw you for even thinking it.

Until today, technology was a tool; it was under our control. With AGI and ASI we’re surrendering control, abdicating our autonomy. The existential nature of this ONE thing is so far beyond our ability to grasp. Foolish developers and Silicon Valley CEOs, you imagine you can aim beyond your range, steer a bullet after it leaves the chamber, control an entity you can't fathom. You're not risking your life; you're risking all of ours. How dare you sign every last one of us up for a ride we can't opt out of.

Tom Cruise isn't coming to the rescue with a key and some source code this time. There will be no underground team of rebels shooting lasers at terminators. None of that will come to pass. If this goes bad, we will just end. Surgically and effectively. Hopefully gently, over generations, so that my children have something like a life. But that's only one possible outcome.

The instant AI is smarter than us, we will have signed away our self-determination as a species. Over. Gone. Forever. We instantly become the subjects of a higher, inevitably flawed, power.

Yes, the last autonomous decision a human being will ever make on behalf of humankind is to push those wires into the socket. So, I'm begging you, don't. Even though I know, fuck, I KNOW, you will.

Because I was that dumb too once.

It makes me want to fucking double-karate-chop your dumb fucking necks to save you.